ChatGPT is receiving attention as the EU intensifies its calls for more regulation. Is its new AI Act sufficient?

Legislators are determining how to control artificial intelligence.

According to an EU official, proposed regulations for artificial intelligence (AI) will address worries about the dangers of products like ChatGPT.

According to Thierry Breton, the European Commissioner for the Internal Market, the hazards associated with applications like ChatGPT and their quick surge in popularity highlight the urgent need for regulations to be put in place.

“As demonstrated by ChatGPT, AI technologies can present both threats and fantastic potential for corporations and citizens. This is why we require a strong legal framework to guarantee reliable AI based on high-quality data,” he said in written comments to Reuters.

These were the first official remarks on ChatGPT by a senior EU official. Breton and his Commission colleagues are currently working with the European Council and the Parliament on what will be the first legal framework for AI.

ChatGPT, which was introduced a little more than two months ago, has sparked an explosion of interest in AI and the applications it can now be used for.

Users can enter prompts into ChatGPT, which was created by OpenAI, to produce content such as articles, essays, poetry, and even computer code.

With ChatGPT rated the fastest-growing consumer app in history, experts have expressed concerns that the technologies behind such apps could be abused for plagiarism, fraud, and spreading false information.

Microsoft declined to comment on Breton’s statement. OpenAI, whose software uses generative AI technology, did not immediately respond to a request for comment.

OpenAI says on its website that it aims to build artificial intelligence that “benefits all of humanity” as it works to create safe and useful AI.


First regulatory framework for AI

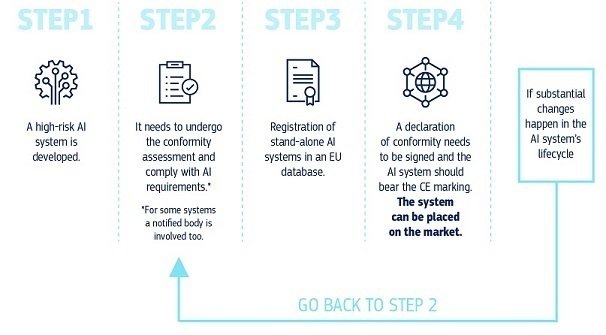

Under the proposed EU regulations, ChatGPT is regarded as a general-purpose AI system that can be applied to a variety of tasks, including high-risk ones such as credit scoring and the selection of job candidates.

Breton wants OpenAI to work closely with downstream developers of high-risk AI systems so that they can comply with the proposed AI Act.

The regulatory system currently sorts artificial intelligence (AI) risk into four levels, which is unsettling some businesses that worry their products may be classified as high risk.

The four levels are:

Unacceptable risk

According to the Commission, any system deemed to pose a clear risk to individuals “would be outlawed,” ranging from “social scoring by governments to toys utilizing voice assistance that encourages harmful behavior.”

High risk

These are AI systems used in vital infrastructure such as transportation, or in educational or employment contexts where they could influence the results of exams or job applications. Law enforcement uses that jeopardize people’s fundamental rights are likewise considered high risk.

Limited risk

These are AI systems that have “particular transparency obligations,” such as a chatbot that discloses that it is an AI.

Minimal or no risk

The “vast majority” of systems presently in use in the EU, according to the Commission, fall into this category. Examples include spam filters and video games with AI support.

People would need to be made aware that they are speaking with a chatbot rather than a real person, according to Breton.

Transparency is crucial in light of the possibility of bias and incorrect information.

Executives at several companies developing artificial intelligence say that falling into the high-risk category would mean stricter compliance requirements and higher costs.

According to a survey conducted by the industry group appliedAI, 51% of respondents anticipate a slowdown in their AI research efforts as a result of the AI Act.

Microsoft is reportedly planning to invest in OpenAI as ChatGPT access is expanded.

Effective AI laws should focus on the applications that pose the greatest risk, Microsoft President Brad Smith wrote in a blog post published on Wednesday.

Regarding how humans would use AI, he admitted that “there are days when I’m optimistic and moments when I’m pessimistic.”

To produce a good response, generative AI models need to be trained on massive amounts of text or images, which can give rise to claims of copyright violations.

Breton said that these issues would be covered in upcoming discussions with lawmakers about AI regulations.